Smartphones are starting to really challenge dedicated cameras:

Huawei P20 Pro vs Canon 5DS R: I’m Stunned… (petapixel.com)

and

Why smartphone cameras are blowing our minds (dpreview.com)


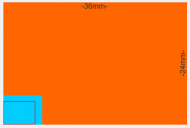

See the blue there? That's the size of the Huawei sensor. It's bigger than the iPhone X sensor (shown with a red outline), but still minuscule compared to the full-frame sensor shown in orange. Yet it compares favourably with the 50MP full-frame Canon 5DS R. It does even better in low light: where there is so little light that the Canon is unable to focus, the Huawei has no problem getting a picture.
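To put rough numbers on that size difference, here's a small Python sketch. It assumes the Huawei's main sensor is a nominal 1/1.7-inch type of about 7.6 × 5.7 mm (my assumption, not a figure from either article) and uses the standard 36 × 24 mm for full frame. At the same f-number and shutter speed, the total light a sensor collects scales with its area, and each "stop" is a doubling of light.

```python
import math

# Sensor dimensions in millimetres (width, height).
# Full frame is the standard 36 x 24 mm. The phone figure is an ASSUMED
# nominal 1/1.7-inch sensor, not a measured Huawei P20 Pro spec.
FULL_FRAME = (36.0, 24.0)
PHONE_ASSUMED = (7.6, 5.7)


def area(dims):
    """Sensor area in square millimetres."""
    width, height = dims
    return width * height


def diagonal(dims):
    """Sensor diagonal in millimetres."""
    width, height = dims
    return math.hypot(width, height)


def compare(big, small):
    """Return (area ratio, difference in stops, crop factor)."""
    ratio = area(big) / area(small)
    # One stop = a doubling of light; at the same f-number and shutter
    # speed, total light collected scales with sensor area.
    stops = math.log2(ratio)
    crop = diagonal(big) / diagonal(small)
    return ratio, stops, crop


ratio, stops, crop = compare(FULL_FRAME, PHONE_ASSUMED)
print(f"area ratio {ratio:.1f}x, {stops:.1f} stops, crop factor {crop:.1f}")
```

With those assumed dimensions the full-frame sensor has roughly 20 times the area, a bit over 4 stops more light, which is the gap the phone's software has to make up.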

Part of the reason for this is that the smartphone actually has three sensors, each with its own lens: a 40MP colour sensor, a 20MP monochrome sensor, and an 8MP sensor behind a telephoto (zoom) lens. The final image is a composite of images from (at least two of) those sensors. While better hardware is involved, a lot of the magic is in the 'jpeg engine', the software that produces the final JPEG: it corrects unwanted lens effects and combines the images from the various sensors. This is nothing new, but smartphones are taking it to a whole new level.
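Here's a toy illustration of how a colour and a monochrome sensor *could* be combined: keep the colour ratios from the colour sensor and blend in brightness from the mono sensor, which has no colour filter over its pixels and so typically collects more light. This is a hypothetical sketch, not Huawei's actual jpeg engine. It assumes the two images are already aligned and at the same resolution, and `fuse_pixel`, `fuse_image` and `mono_weight` are made-up names.

```python
# Rec. 601 luma weights, used here as an assumed brightness measure.
LUMA = (0.299, 0.587, 0.114)


def luma(rgb):
    """Approximate brightness of an (r, g, b) pixel with values in [0, 1]."""
    return sum(w * c for w, c in zip(LUMA, rgb))


def fuse_pixel(rgb, mono, mono_weight=0.7):
    """Blend brightness from an aligned mono pixel into a colour pixel.

    rgb: (r, g, b) floats in [0, 1] from the colour sensor
    mono: float in [0, 1] from the monochrome sensor (already aligned)
    mono_weight: hypothetical share of brightness taken from the mono sensor
    """
    y = luma(rgb)
    target = mono_weight * mono + (1 - mono_weight) * y
    if y <= 1e-6:
        # No colour information to scale: fall back to neutral grey.
        return (target, target, target)
    scale = target / y
    # Scaling all channels together keeps the colour sensor's hue
    # (channel ratios) while adopting the blended brightness.
    return tuple(min(1.0, c * scale) for c in rgb)


def fuse_image(colour, mono, mono_weight=0.7):
    """colour: rows of (r, g, b) tuples; mono: rows of floats, same shape."""
    return [
        [fuse_pixel(c, m, mono_weight) for c, m in zip(crow, mrow)]
        for crow, mrow in zip(colour, mono)
    ]
```

For example, `fuse_image([[(0.2, 0.4, 0.1)]], [[0.6]])` returns a single pixel with the same green-heavy colour but brightness pulled towards the mono reading.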

The Google Pixel 2 XL has a different trick:

It's constantly keeping the last 9 frames it shot in memory, so when you press the shutter it can grab them, break each into many square 'tiles', align them all, and then average them. Breaking each image into small tiles allows for alignment despite photographer or subject movement by ignoring moving elements, discarding blurred elements in some shots, or re-aligning subjects that have moved from frame to frame. Averaging simulates the effects of shooting with a larger sensor by 'evening out' noise.-dpreview

(my emphasis)
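The align-and-average idea from the dpreview quote can be sketched in plain Python. This is a deliberately simplified version, not Google's actual pipeline: frames are greyscale grids of numbers, alignment only tries small whole-pixel shifts per tile, matching uses the mean absolute difference, and tiles that still don't match well (moving subject, blur) are simply left out. All function names and thresholds here are hypothetical.

```python
import random


def tile_error(ref, frame, y0, x0, dy, dx, size):
    """Mean absolute difference between a reference tile and a shifted tile."""
    total = 0.0
    for y in range(y0, y0 + size):
        for x in range(x0, x0 + size):
            total += abs(ref[y][x] - frame[y + dy][x + dx])
    return total / (size * size)


def best_shift(ref, frame, y0, x0, size, radius):
    """Try every whole-pixel shift within +/-radius; return (error, dy, dx)."""
    h, w = len(ref), len(ref[0])
    best = None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip shifts that would read outside the frame.
            if not (0 <= y0 + dy and y0 + dy + size <= h
                    and 0 <= x0 + dx and x0 + dx + size <= w):
                continue
            err = tile_error(ref, frame, y0, x0, dy, dx, size)
            if best is None or err < best[0]:
                best = (err, dy, dx)
    return best


def merge_burst(frames, size=4, radius=2, reject_above=0.1):
    """Align each tile of every frame to frames[0], then average.

    Tiles whose best match is still worse than reject_above (a moving
    subject, motion blur) are left out of that tile's average.
    Assumes frame height and width are multiples of size.
    """
    ref = frames[0]
    h, w = len(ref), len(ref[0])
    out = [[0.0] * w for _ in range(h)]
    for y0 in range(0, h, size):
        for x0 in range(0, w, size):
            accepted = [(ref, 0, 0)]  # the reference tile always counts
            for frame in frames[1:]:
                match = best_shift(ref, frame, y0, x0, size, radius)
                if match is not None and match[0] <= reject_above:
                    accepted.append((frame, match[1], match[2]))
            for y in range(y0, y0 + size):
                for x in range(x0, x0 + size):
                    total = sum(f[y + dy][x + dx] for f, dy, dx in accepted)
                    out[y][x] = total / len(accepted)
    return out


def simulate_burst(n_frames=9, size=16, noise=0.03, seed=0):
    """Hypothetical test data: a random texture shot with a shaky, noisy camera."""
    rng = random.Random(seed)
    pad = 2
    n = size + 2 * pad
    scene = [[rng.random() for _ in range(n)] for _ in range(n)]
    clean = [row[pad:pad + size] for row in scene[pad:pad + size]]
    frames = []
    for k in range(n_frames):
        # Frame 0 is the reference; the others are shifted by up to 1 pixel.
        sy, sx = (0, 0) if k == 0 else (rng.randint(-1, 1), rng.randint(-1, 1))
        frames.append([[scene[y + pad + sy][x + pad + sx] + rng.gauss(0, noise)
                        for x in range(size)] for y in range(size)])
    return clean, frames


def rms_error(a, b):
    """Root-mean-square difference between two equally sized grids."""
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return (sum(diffs) / len(diffs)) ** 0.5


clean, frames = simulate_burst()
merged = merge_burst(frames)
print("single frame error:", rms_error(frames[0], clean))
print("merged error:      ", rms_error(merged, clean))
```

Averaging n frames with independent noise cuts the noise by about a factor of √n, which is the 'evening out' the quote describes: nine well-aligned frames give roughly a third of the noise of one. Tiles at the edges, where some shifts can't be checked, get fewer frames and so a bit less improvement.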

Sunrise at Banff, with Mt. Rundle in the background. Shot on Pixel 2 with one button press. I also shot this with my Sony a7R II full-frame camera, but that required a 4-stop reverse graduated neutral density ('Daryl Benson') filter, and a dynamic range compensation mode (DRO Lv5) to get a usable image. While the resulting image from the Sony was head-and-shoulders above this one at 100%, I got this image from a device in my pocket by just pointing and shooting.-dpreview

(my emphasis)

That's a stunning picture with really good exposure under difficult conditions.

I have to admit I don't even know what the "4-stop reverse graduated neutral density ('Daryl Benson') filter" required for the 'real' camera is, never mind the "dynamic range compensation mode", but it all sounds very complicated.

Another aspect of 'traditional' photography that is now being done very successfully by the software engine is creating bokeh (background blur), using the phone's ability to recognise depth in the image. Bokeh comes much more easily with larger sensors, especially with lenses used at wide-open apertures. It can be a PITA to manage, though: if you're very close to your subject, or using a long zoom, the area in focus (depth of field) can be so shallow as to be problematic. The nose is in focus but the eyes aren't :-/ Smartphone software is now starting to create a convincing artificial bokeh: the smartphone's small sensor gives a very deep depth of field (most of the scene is in focus), so the software can choose to blur what it wants, or better, what you want, keeping the rest in focus.

the beauty of computational approaches: while F1.2 lenses can usually only keep one eye in focus—much less the nose or the ear—computational approaches allow you to choose how much you wish to keep in focus even if you wish to blur the rest of the scene to a degree where traditional optics wouldn't allow for much of your subject to remain in focus.-dpreview
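A minimal sketch of depth-based synthetic bokeh, assuming the phone has already produced a depth map (a distance in metres for every pixel). Each pixel is blurred with a simple box filter whose radius grows with its distance from the chosen focus plane; `in_focus_range` is the knob the quote talks about, how much of the scene stays sharp. Real phones use subject segmentation and disc-shaped blur rather than a box, and treat the edges around the subject much more carefully; the function name and parameters here are hypothetical.

```python
def synthetic_bokeh(image, depth, focus, in_focus_range=0.5,
                    strength=2.0, max_radius=4):
    """Blur each pixel by an amount that grows with distance from focus.

    image: rows of brightness values
    depth: same shape, distance in metres (assumed to come from the
           phone's depth estimation)
    focus: distance in metres of the plane to keep sharp
    in_focus_range: half-width in metres of the band kept fully sharp
    strength: hypothetical blur radius (pixels) per metre outside that band
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            defocus = max(0.0, abs(depth[y][x] - focus) - in_focus_range)
            r = min(max_radius, int(round(strength * defocus)))
            # Box blur of radius r, clipped at the image edges.
            # r == 0 leaves the pixel untouched.
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            vals = [image[yy][xx] for yy in ys for xx in xs]
            out[y][x] = sum(vals) / len(vals)
    return out
```

Widening `in_focus_range` keeps more of the subject sharp no matter how strong the background blur is, which is the freedom the quote describes and an f/1.2 lens can't offer. A known weakness of this naive version is that background pixels next to the subject pick up some of the subject's brightness in their blur.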

Disclaimer: I'm not an expert, but I've tried here to distill two recent articles about smartphone cameras. If you notice any mistakes, do let me know.

Thanks, Tom