Here is a related question for those of you who know... Assuming you are not gaming or doing any high-quality artwork, is there any reason not to use onboard video? -steeladept

First of all, your assumption is wrong. I'm a gamer.

Second of all, there is no such thing as "onboard video" on the motherboards that allow overclocking of the CPU I'm getting.

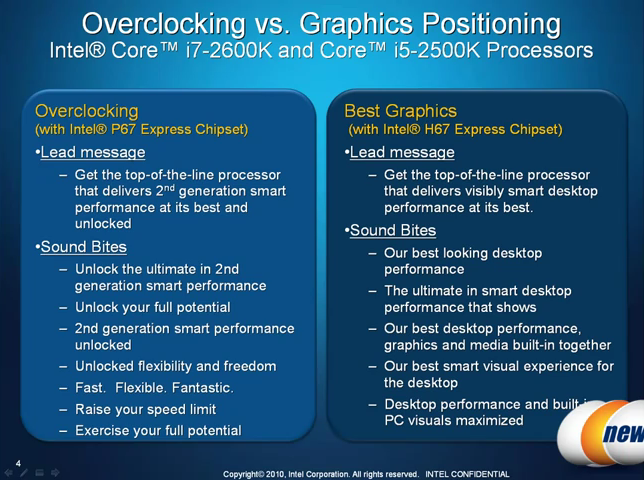

IIRC, there are P67 and H67 motherboards for these CPUs. The H67 series enables the CPU's integrated graphics (Intel HD 3000), while the P67 series unlocks the CPU's overclocking abilities but disables the built-in graphics. Or something.

Anyway, the point is that I want to overclock, so I'm getting a P67 series motherboard, which means I'd need a discrete GPU anyway.

But I think you're right: most people would be happy with an H67 mobo using the integrated video, without a discrete GPU.

Here's a video detailing the features of these CPUs:

EDIT: added screenshot of slide seen at about 6:11 in the video above:

EDIT 2: I just realized that your question wasn't directed at me; it was a general question about whether onboard video would be good enough in the scenario you described.