Wow, I can't believe you could simply do that! Thanks, I'll look into this database and see what I can extract from it.

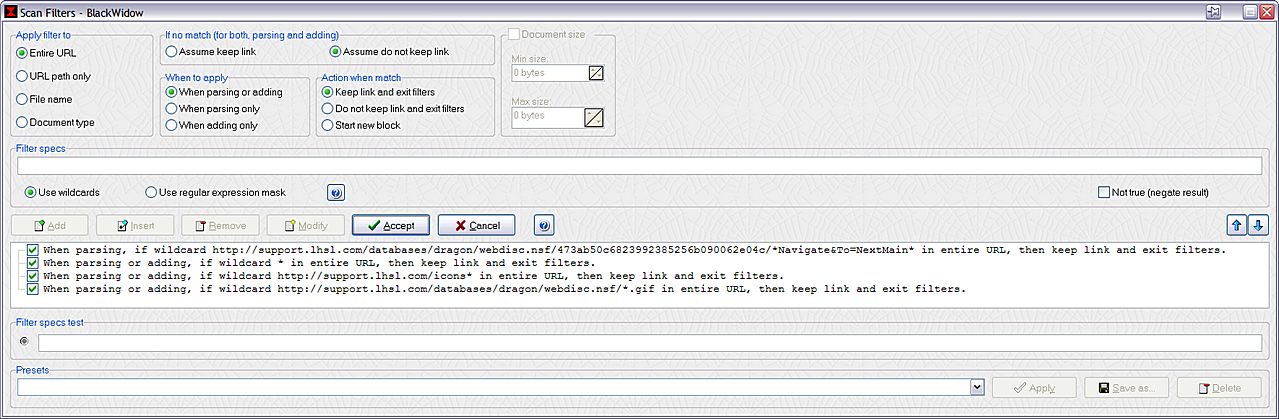

Otherwise, what I have found out is that, as far as "spidering intelligence" goes, Blackwidow is one of the best programs, since you can specify

a) which pages are scanned only for the links you want; and

b) which pages are actually downloaded.

This way, Blackwidow actually manages to crawl from posting to posting in that forum, downloading only the posts and ignoring EVERYTHING else on the website. It also avoids downloading the same posting more than once.
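
For anyone who wants to see the idea spelled out, here is a rough Python sketch of a crawl with separate "scan" and "download" filters. It is not how Blackwidow is implemented (I don't know its internals); the function names, the regex patterns and the `fetch` callback are all my own assumptions for illustration.

```python
import re
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, scan_pattern, download_pattern):
    """Breadth-first crawl with separate scan and download filters.

    fetch(url) is a caller-supplied function (an assumption of this sketch)
    that returns the page HTML as a string, or None on failure.
    Returns a dict mapping each downloaded URL to its HTML.
    """
    scan_re = re.compile(scan_pattern)
    download_re = re.compile(download_pattern)
    seen = {start_url}          # every URL is queued at most once
    queue = deque([start_url])
    saved = {}
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        if download_re.search(url):
            saved[url] = html   # filter b): keep this page
        if not scan_re.search(url):
            continue            # filter a): do not follow links from here
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" so that
            # post.php?id=11 and post.php?id=11#top count as one page.
            target, _ = urldefrag(urljoin(url, href))
            wanted = scan_re.search(target) or download_re.search(target)
            if wanted and target not in seen:
                seen.add(target)
                queue.append(target)
    return saved
```

You would call it with something like `crawl(start, fetch, r"/(index|topic|post)\.php", r"/post\.php\?id=\d+$")`, where the patterns are made-up examples for a forum whose postings live under `post.php`. The `seen` set is what keeps every posting from being fetched multiple times.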

Blackwidow, however, seems to have a hard time rewriting the HTML code so that the result is actually browsable offline.

That, however, is what WinHttrack does marvelously. Unfortunately, WinHttrack doesn't have filter settings as sophisticated as Blackwidow's. With WinHttrack, you can't differentiate between pages that are only scanned for links and pages that are downloaded. Therefore WinHttrack, which does a beautiful job of converting the pages for offline browsing, ends up downloading much more than what you are actually after.
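
To show what "converting for offline browsing" roughly involves, here is a minimal Python sketch that rewrites the links in a saved page so they point at local files. This is only my guess at the general technique, not WinHttrack's actual method; the flat file-naming scheme in `local_name` and both function names are hypothetical.

```python
import re
from urllib.parse import urljoin, urldefrag


def local_name(url):
    """Map a URL to a flat, filesystem-safe file name (hypothetical scheme)."""
    stem = re.sub(r"^[a-z]+://", "", url)
    return re.sub(r"[^A-Za-z0-9._-]", "_", stem) + ".html"


def rewrite_links(html, page_url, saved_urls):
    """Point href attributes at local files when the target was downloaded."""

    def replace(match):
        quote, href = match.group(1), match.group(2)
        absolute = urljoin(page_url, href)
        target, fragment = urldefrag(absolute)
        if target not in saved_urls:
            # Not downloaded: make the link absolute so it still works online.
            return f"href={quote}{absolute}{quote}"
        suffix = "#" + fragment if fragment else ""
        return f"href={quote}{local_name(target)}{suffix}{quote}"

    # Simple regex over quoted href attributes; a real tool would need a
    # proper HTML parser and would also handle images, CSS and scripts.
    return re.sub(r"""href=(["'])(.*?)\1""", replace, html)
```

Each downloaded page would then be written to disk under `local_name(url)` after passing through `rewrite_links`, so that clicking from one saved posting to the next stays on the local copy.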

Thanks everyone,

David.P

PS: Filter settings in Blackwidow: